Fall 2022 ROS-I Community Meeting Happenings

The ROS-Industrial Community Meeting was held on Wednesday, 9/21, and it brought a few interesting updates, not only for the industrial ROS community but also for the broader ROS/open source robotics community. I wanted to take a moment to highlight some key points from this meeting and share links to the presentations. Typically, I add these to the event agenda, but because of some changes in how the meeting unfolded, I figured a blog post would be easier to track down.

If you just want the speakers' decks, I have included them here (and the YouTube playlist is HERE for your viewing pleasure!):

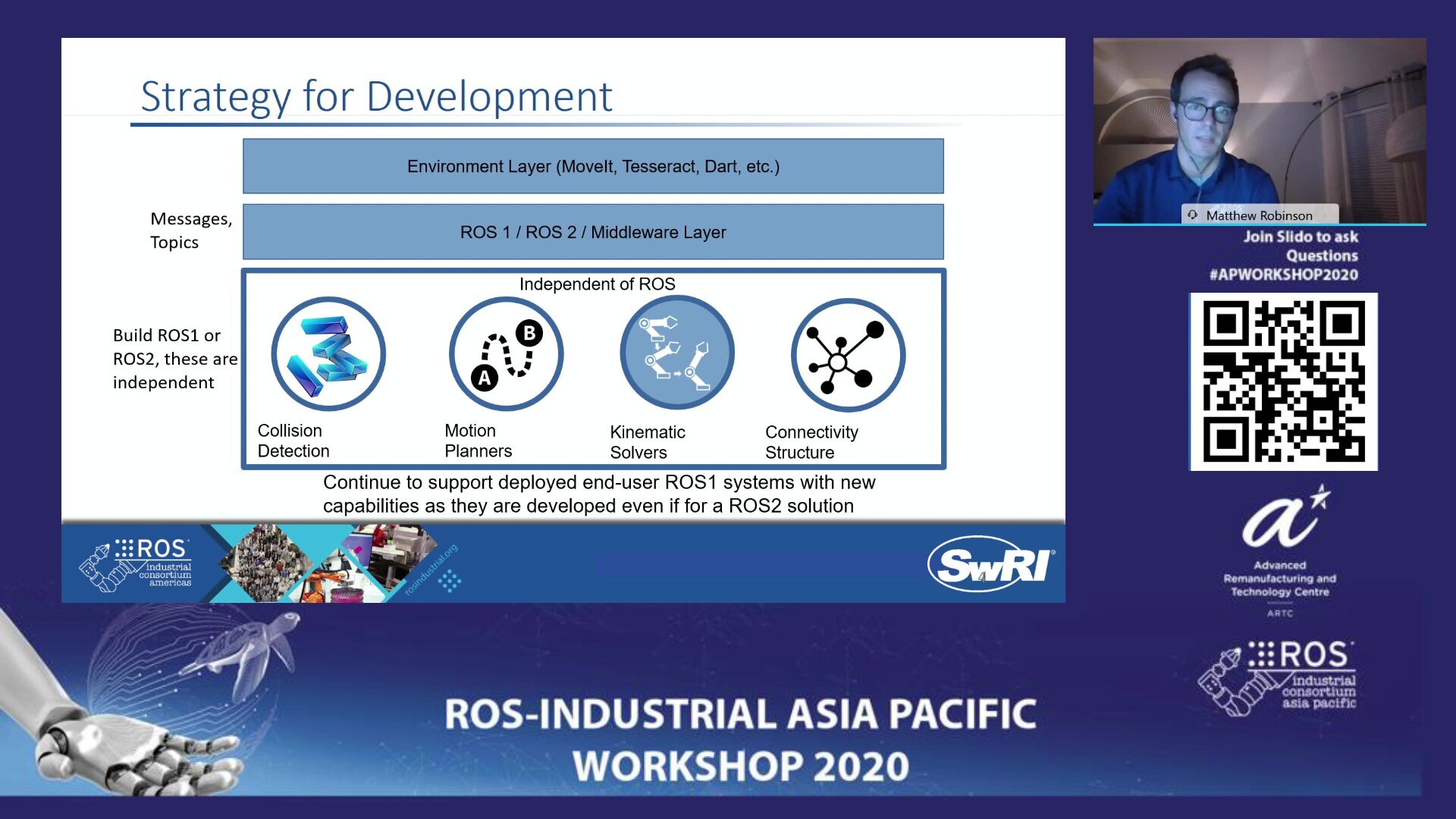

- ROS-Industrial Program and Technical Updates – Matt Robinson & Michael Ripperger, ROS-I/SwRI

- Intel RealSense – Recent Releases and Updates on Driver Support – Brad Suessmith and Oded Dubovsky, Intel

- NIST Introduction on Robotics Agility – Craig Schlenoff, NIST

- Update on the ConnTact Framework for Agile Assembly – John Bonnin, SwRI

- MoveIt & MoveIt Studio Updates and What’s Coming – Dave Coleman, PickNik

As is typical for ROS-I meetings, it started with general updates on the ROS-I open source project and program. A fair amount of time was spent on ROS-Industrial training. It is no secret that during COVID we had to go virtual, and that certainly made training accessible to more people. As in-person events became possible again, we experimented with delivering training in a "hybrid" fashion, with students able to opt to attend remotely.

The feedback has not been very positive. Online attendees feel they do not get the same value as those in the room, and they are often left more on their own than those receiving in-person guidance from the instructors.

Moving forward, we will no longer offer hybrid training, and will instead periodically offer a fully virtual training option for those who do not want to attend an in-person event.

That said, with in-person events broadly the norm again, we have brought back member-hosted training, which provides more regional opportunities to attend a training event without a near cross-country flight. We look forward to continuing to offer member-hosted events, covering both coasts and the Midwest.

I also previewed some of the data we received at the annual meeting on what we can do to improve adoption of ROS across the industrial robotics community. It isn't quite a Venn diagram, as shown below, but ease of use and training both contribute to roadblocks to adoption. We are continuing to align this with past workshop feedback data and process-domain-specific feedback to provide an updated roadmap for the ROS-I open source project. Stay tuned!

Initial digestion of workshop feedback from ROS-I Annual Meeting

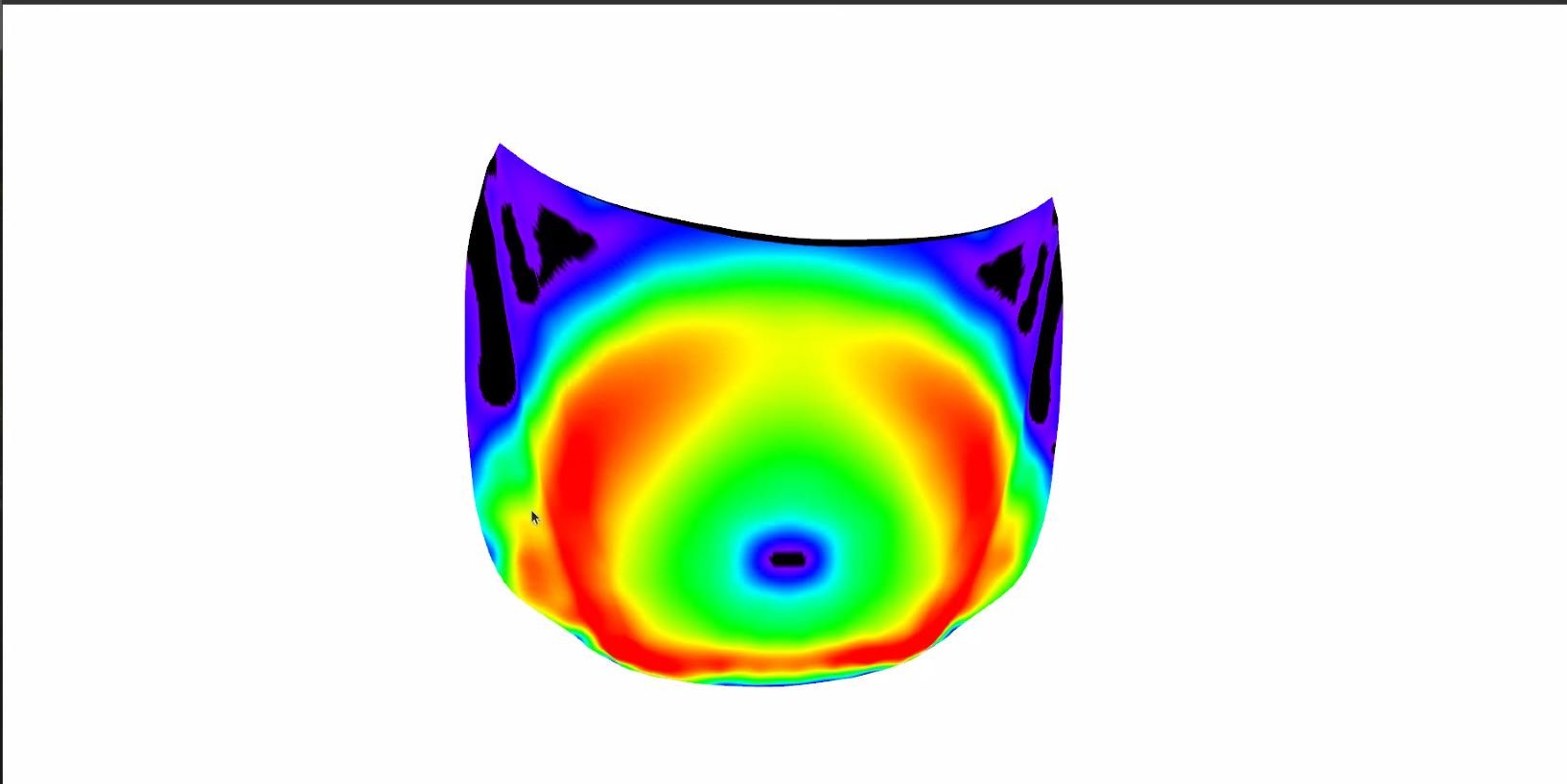

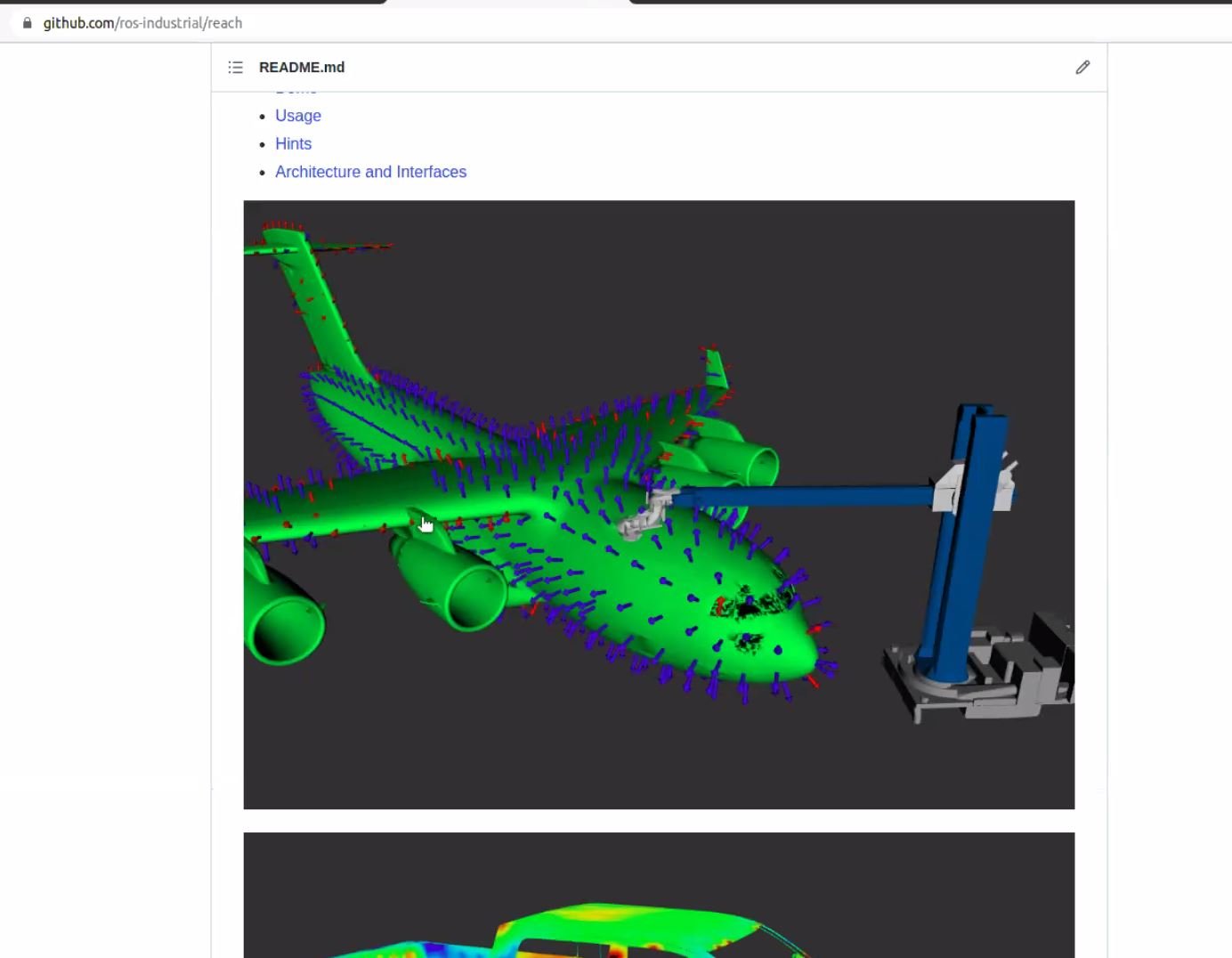

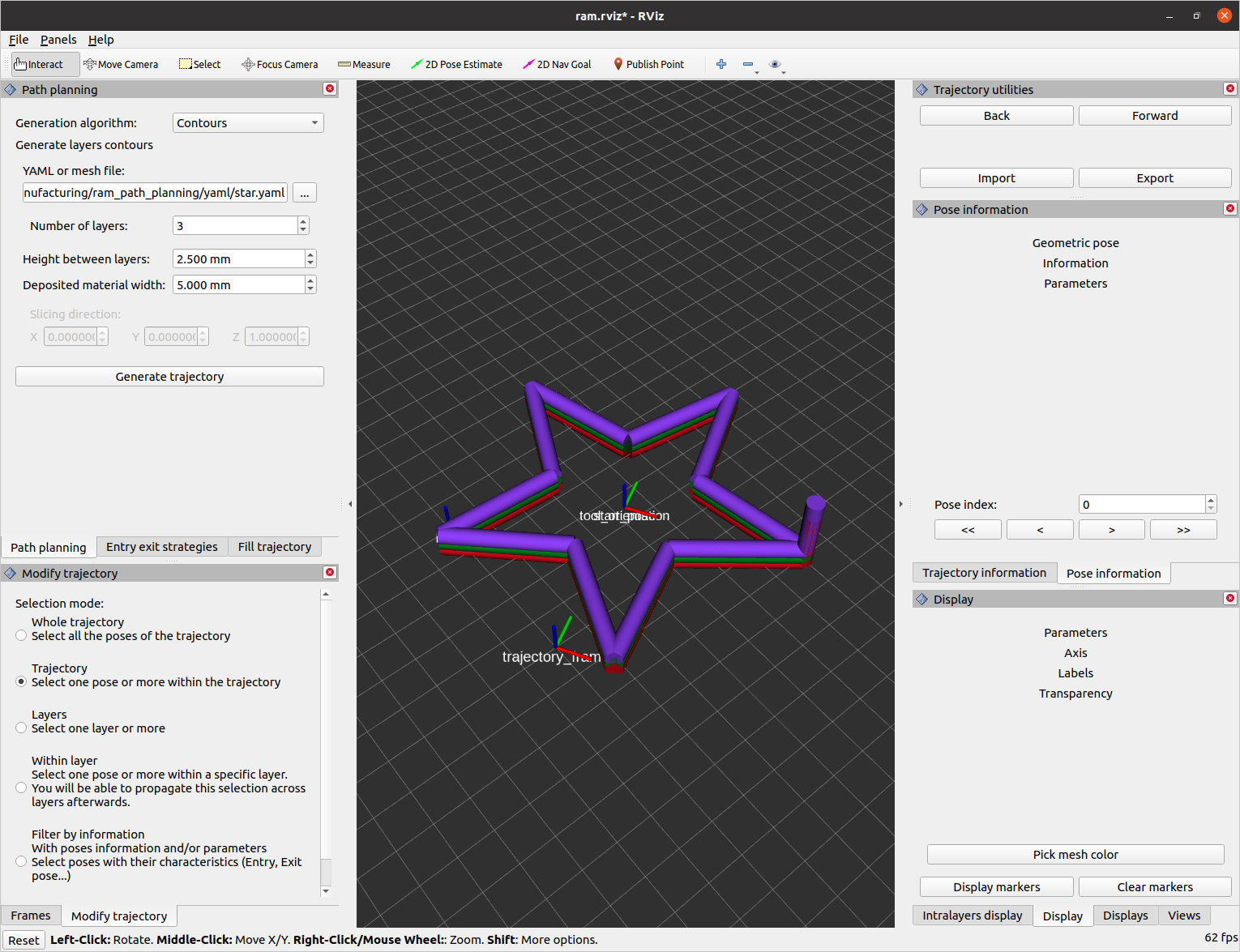

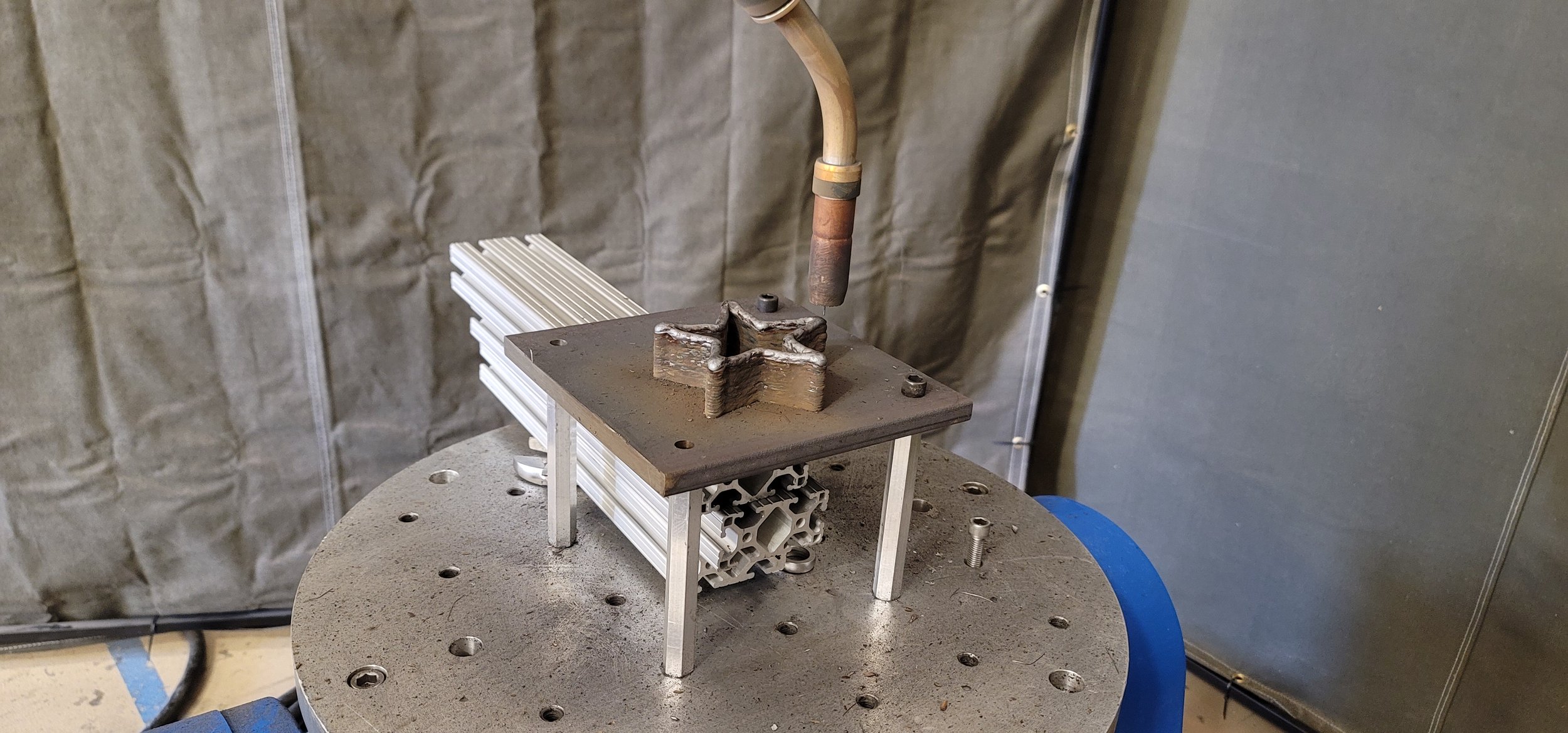

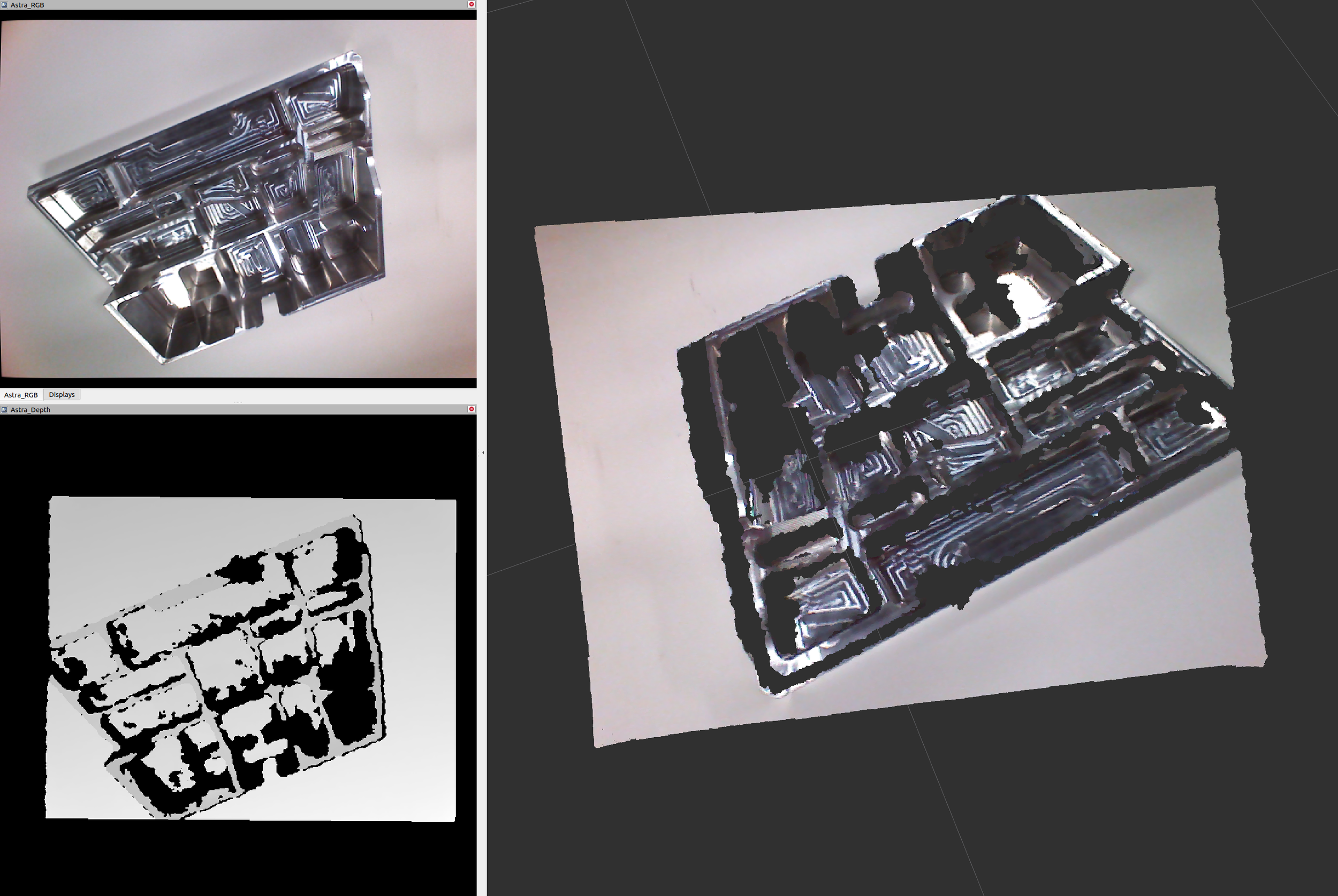

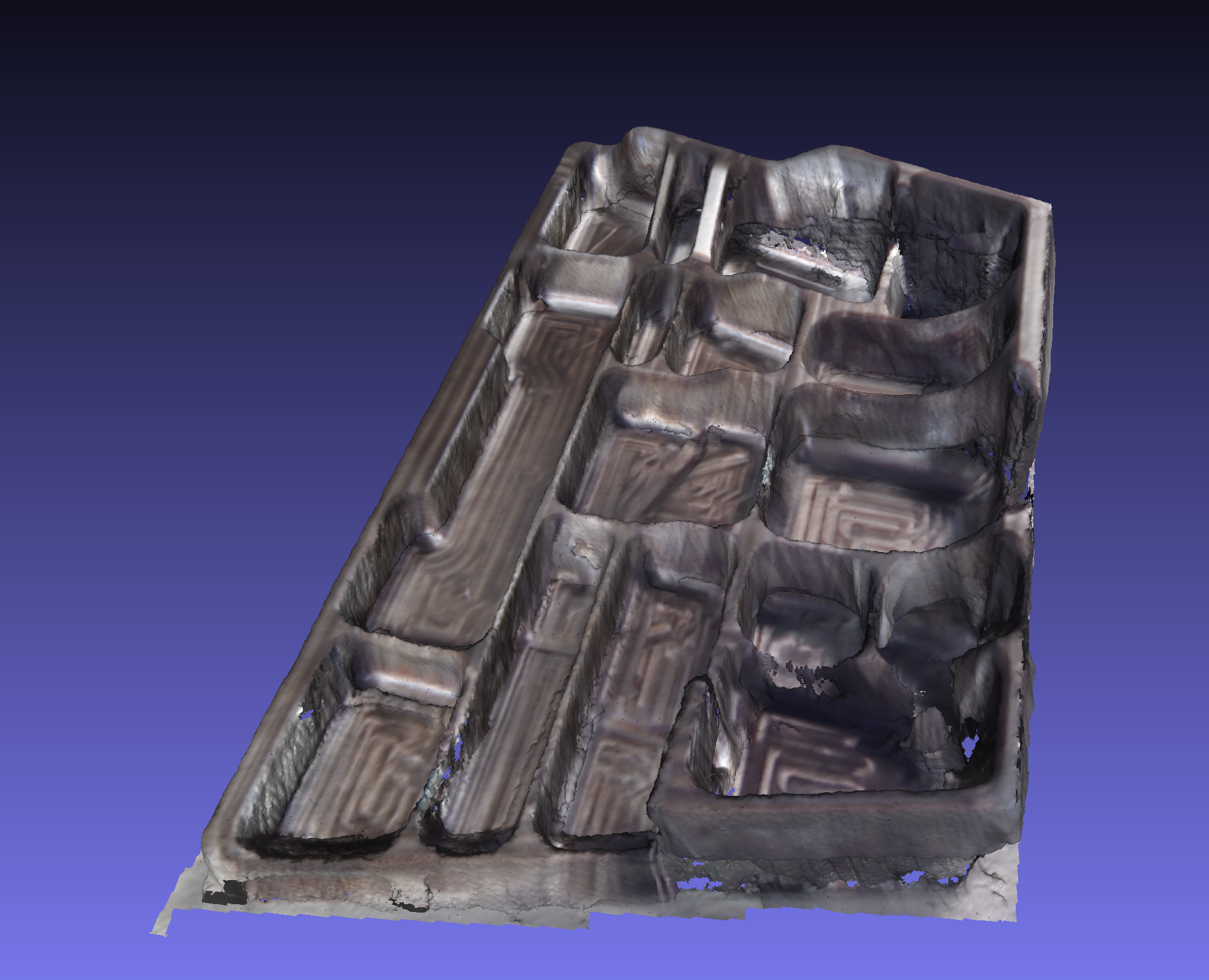

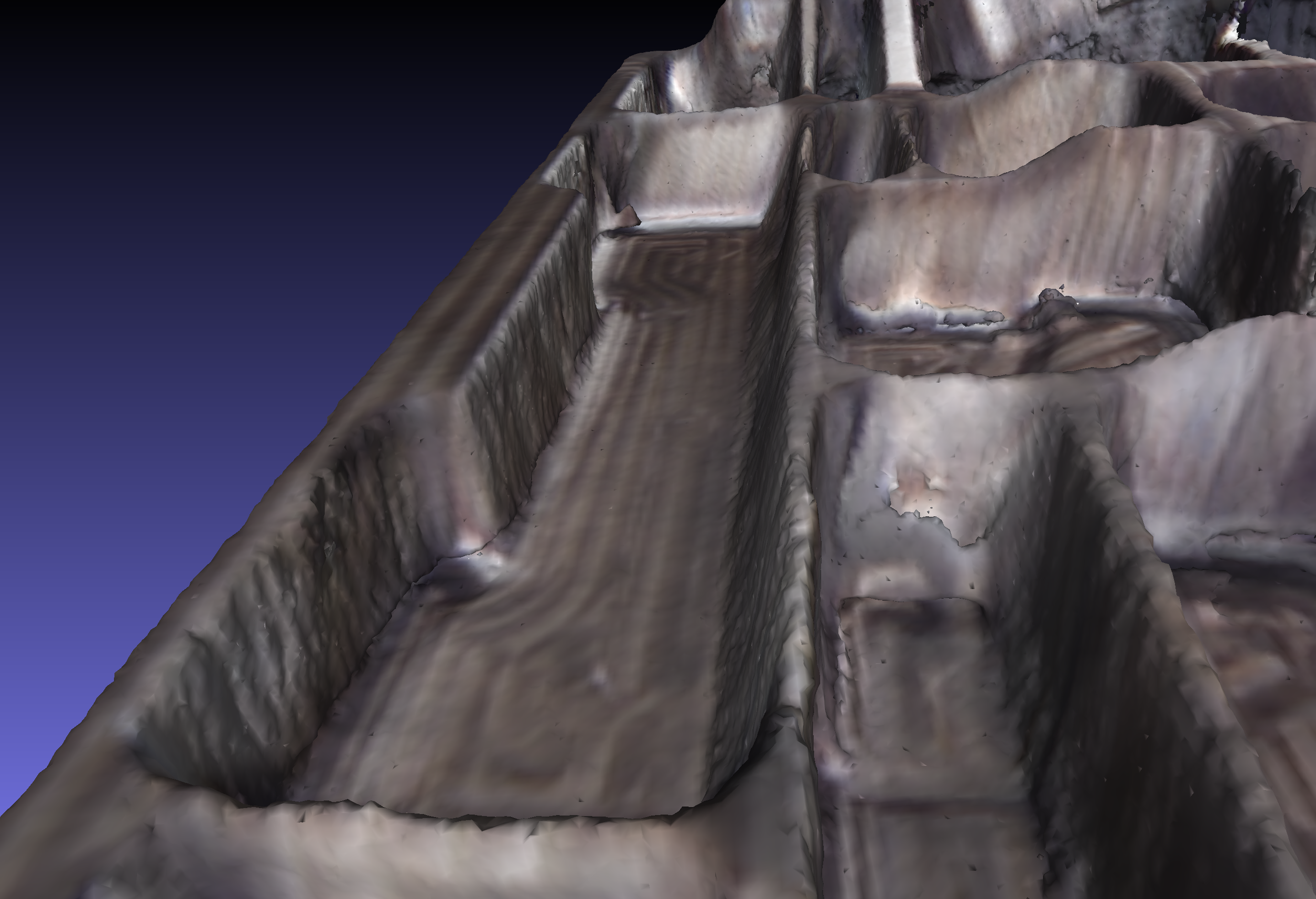

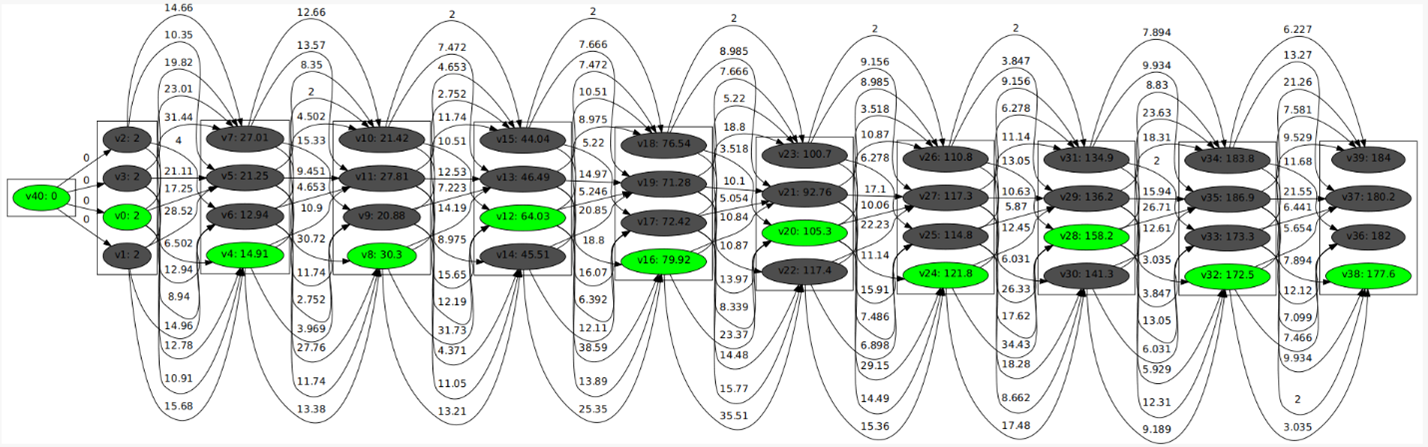

From here, Michael Ripperger, ROS-I Tech Lead, shared some interesting updates on the Reach repository, including new scoring features that assess the reachability of a manipulator configuration relative to a surface targeted for processing. These utilities have been refined to provide richer displays that support analysis and improved solution design for applications ranging from painting to surface finishing. The updated repository may be found here. Recent improvements to industrial reconstruction were also noted, as various users across the community kick the tires and provide feedback.

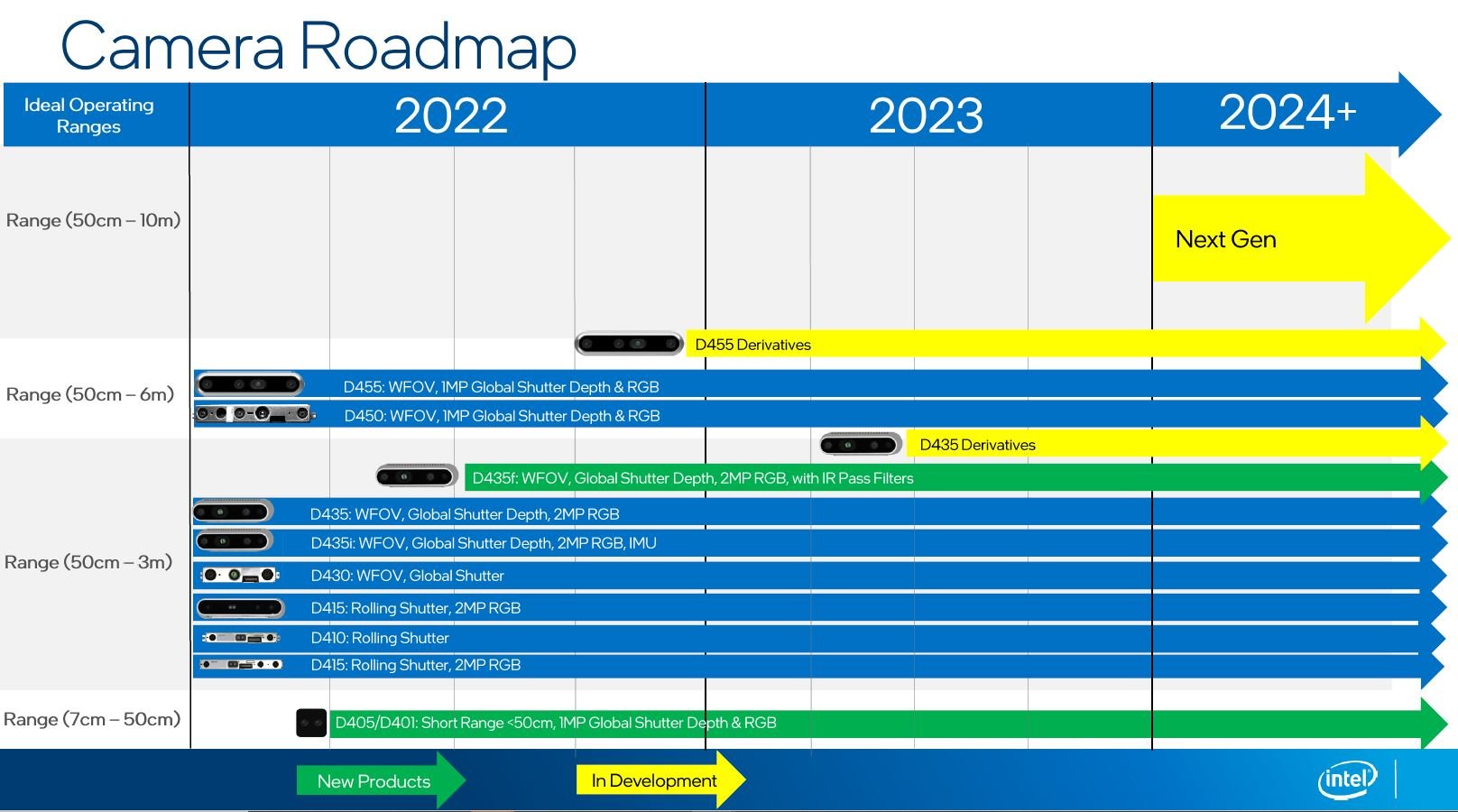

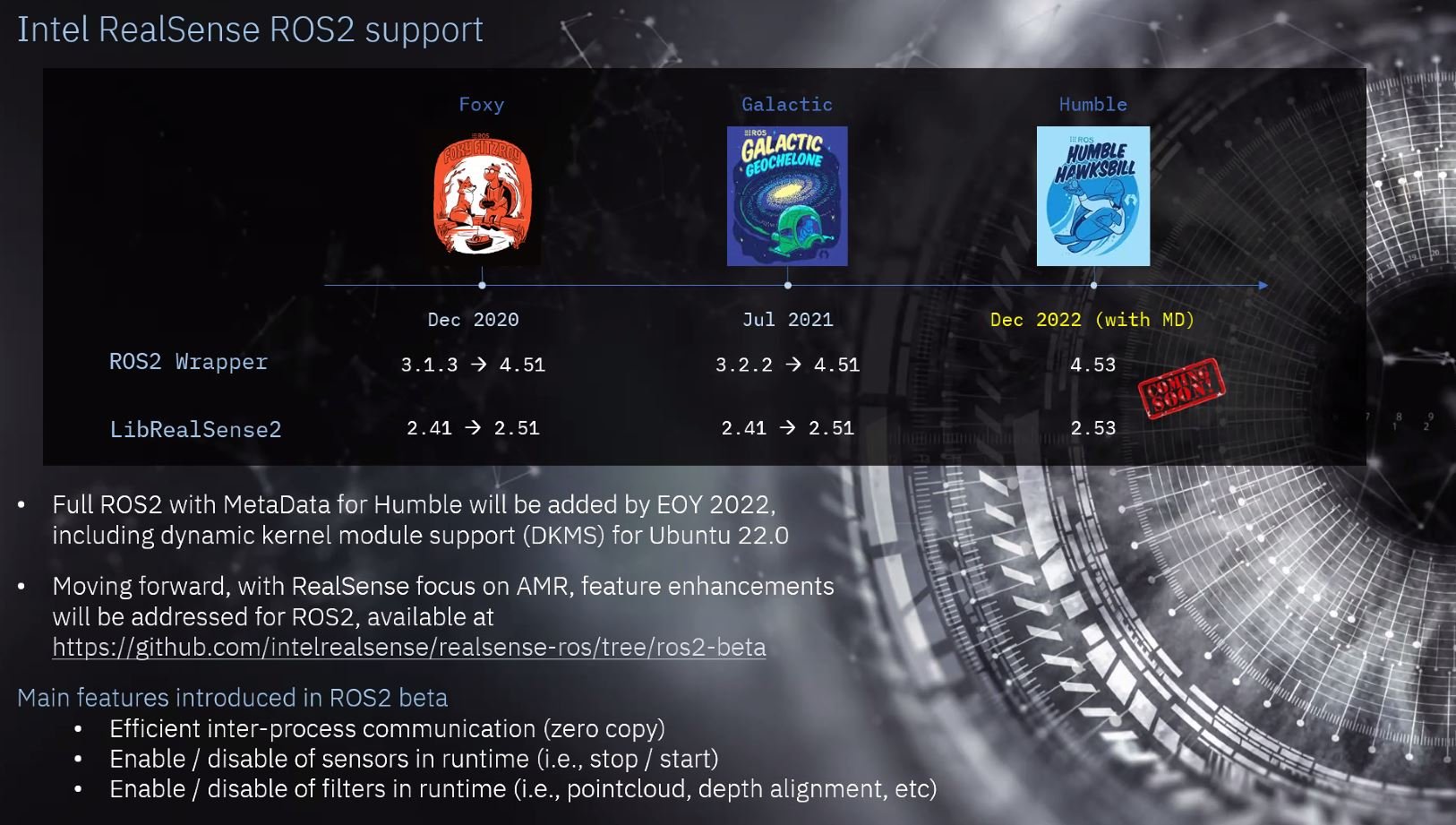

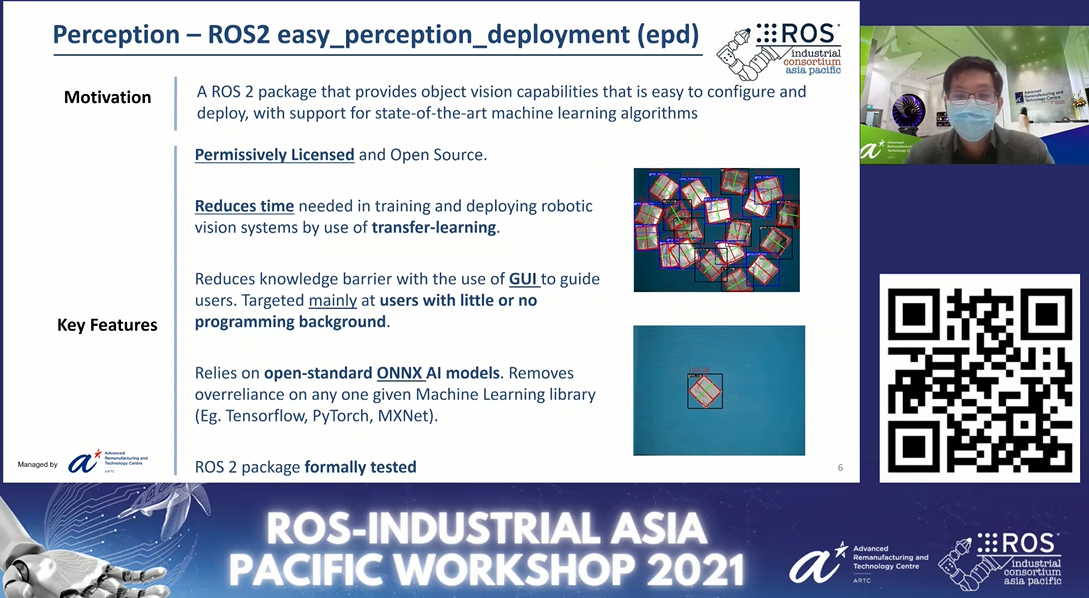

Brad Suessmith and Oded Dubovsky from Intel provided an update on the Intel RealSense product lineup, including what is on the horizon and when releases will be announced. Stay tuned for a splashy announcement soon, timed with the upcoming vision shows. Oded covered the roadmap for the ROS 2 driver, with a big push toward a ROS 2 beta by the end of 2022.

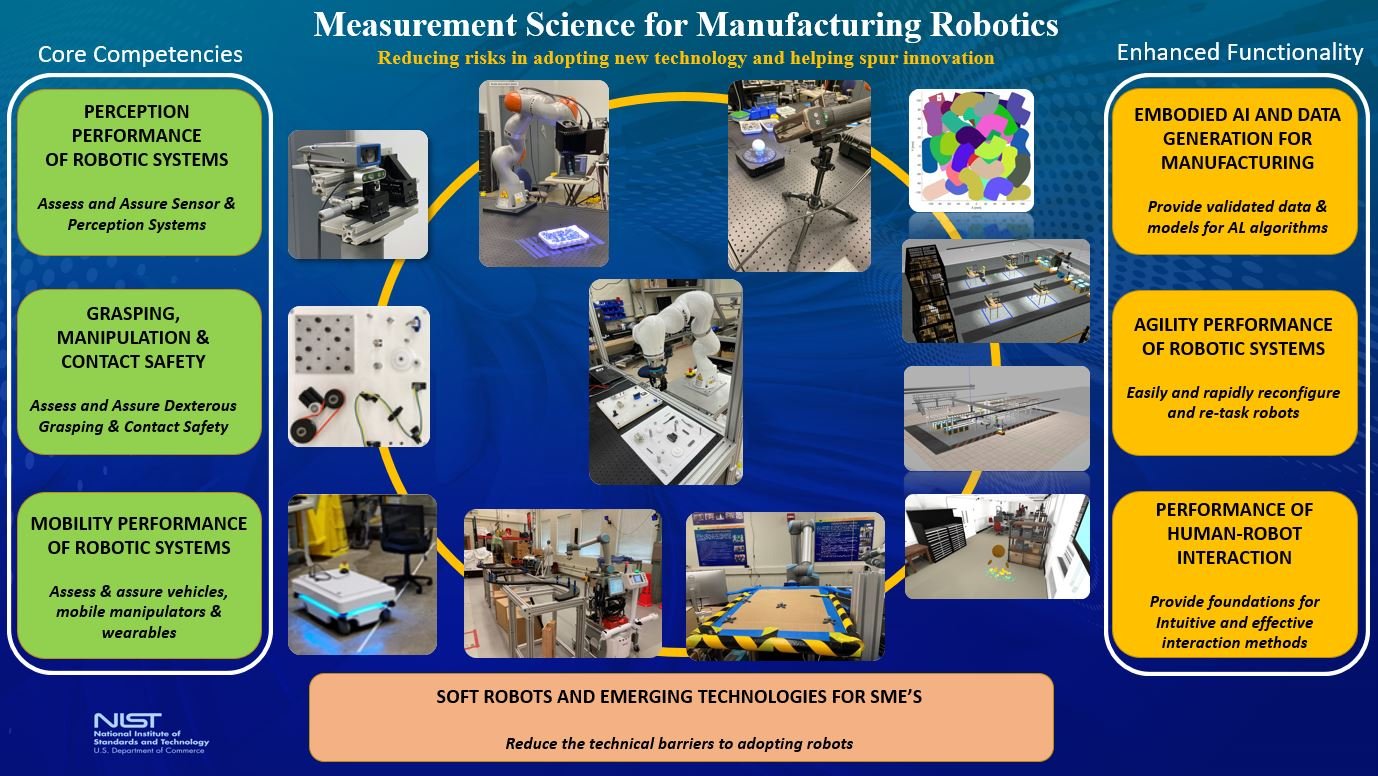

Craig Schlenoff reviewed NIST's role in participating in, and even funding, work around open source robotics. The focus of this talk was on realizing agile performance within robotic systems, the key idea being to enable easy and rapid reconfiguration and re-tasking of robotic systems. This has been broken into hardware agility and software agility, and Craig summarized why this is of interest and how it fits into the NIST vision of disseminating standards and practices that support sustainable capability development and proliferation. You can also check out the recently launched Manufacturing AI site, which seeks to provide a landing page for information on AI development for manufacturing.

NIST high-level view of Measurement Science for Manufacturing Robotics

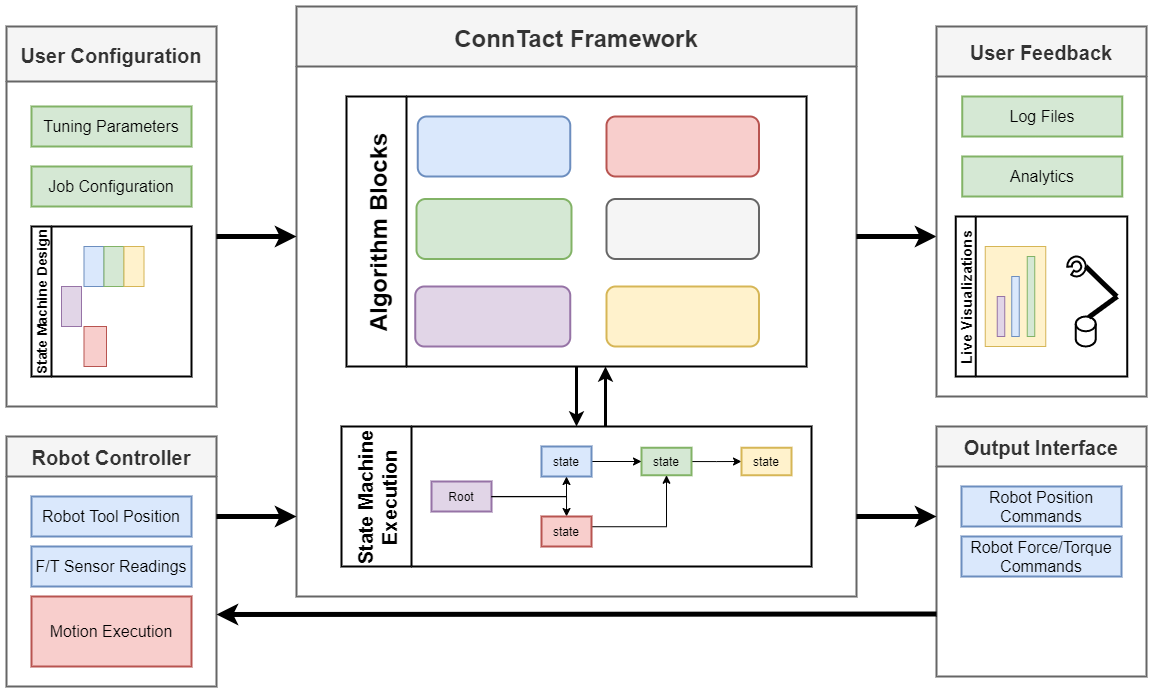

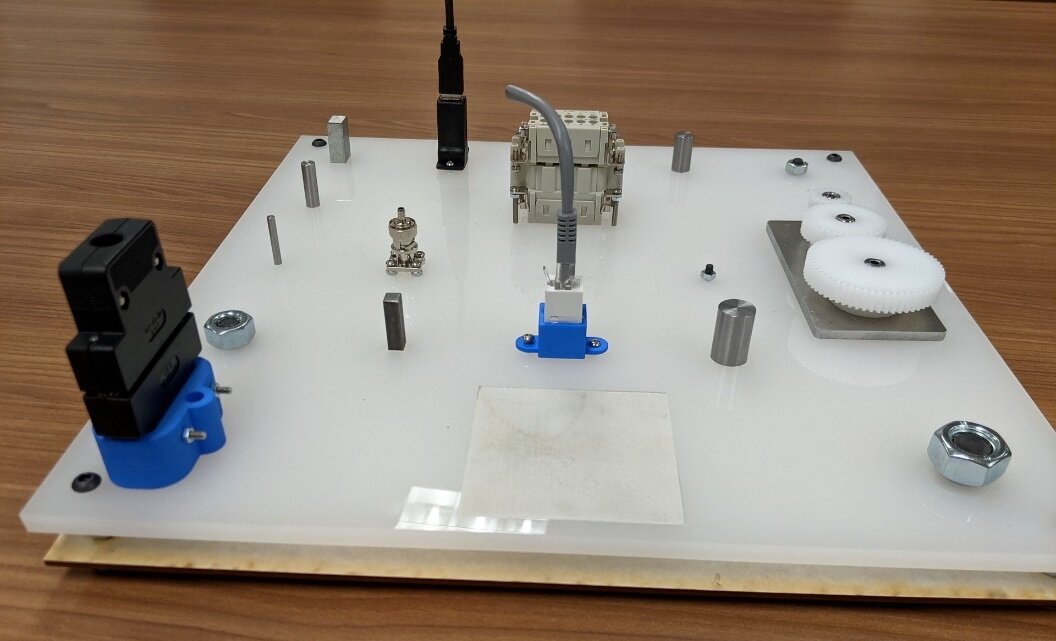

John Bonnin, from SwRI, then took a deep dive into the ConnTact framework. Here, the intent is to provide a way to easily evaluate learning policies for various assembly tasks, initially targeting the NIST assembly task board, in a way that abstracts away the details of specific hardware while enabling simpler task definition. The framework has been further refactored, and a port to ROS 2 is underway in parallel. The community is encouraged to check out the repository and help improve this framework!

Updated schematic of the ConnTact Framework

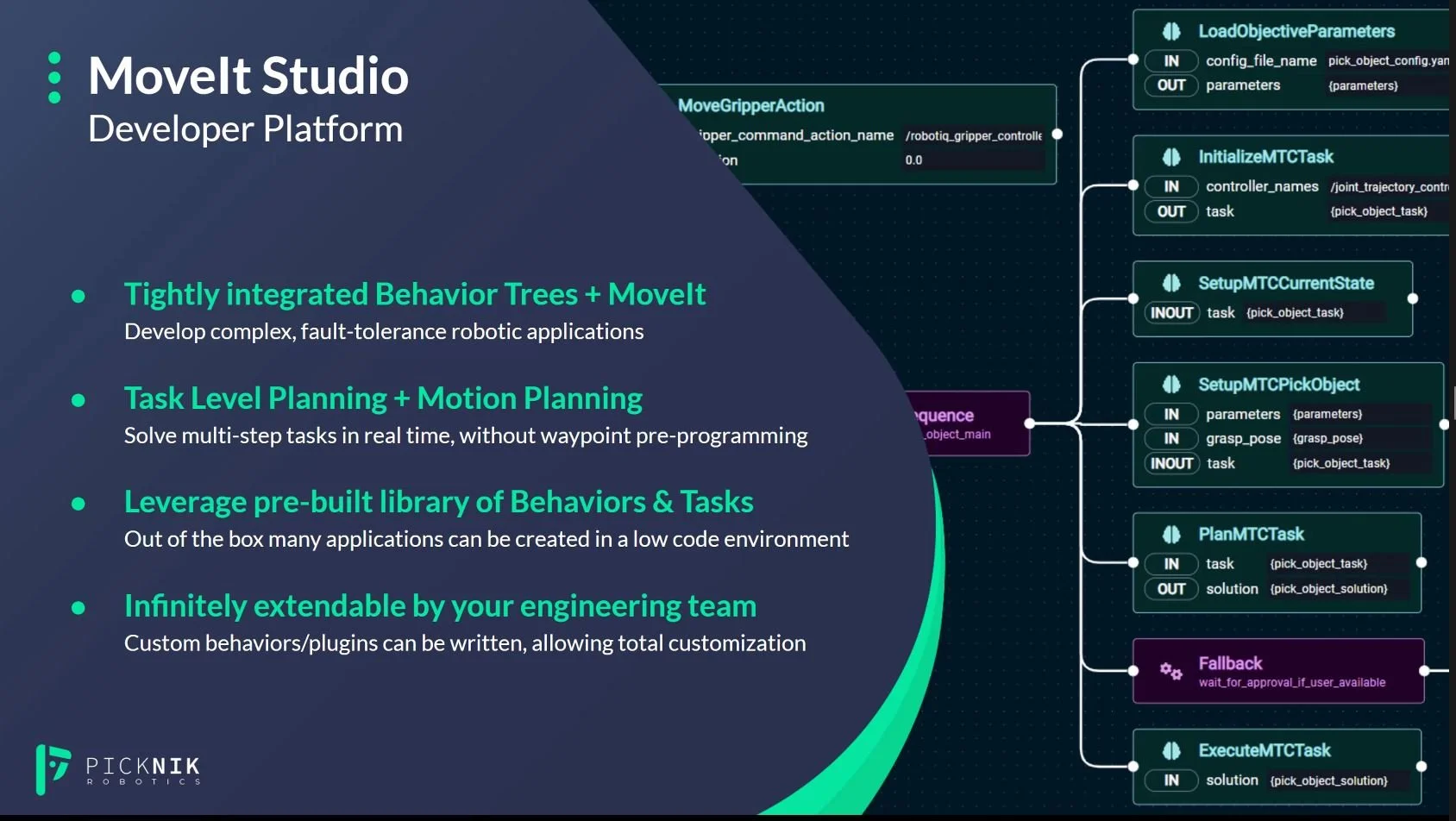

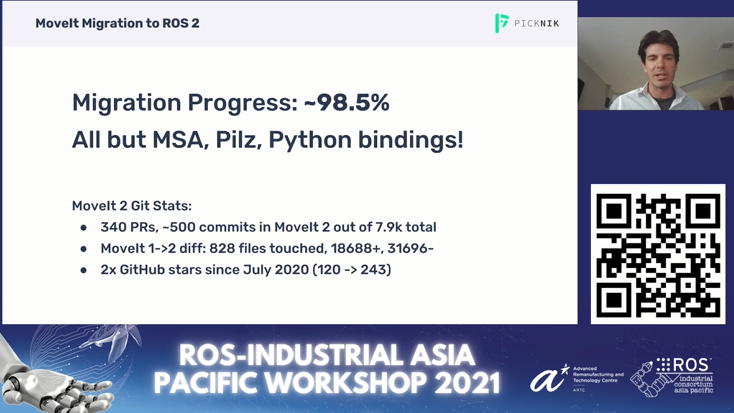

PickNik CEO Dave Coleman dug into MoveIt (he reviewed the roadmap, and great things are coming!) and MoveIt Studio. For those who may not have had the chance to see MoveIt Studio in action, the intent is to provide a platform for developing complex yet fault-tolerant robotic applications. The experience is simpler and reduces reliance on high-end experts, although a certain level of expertise is still required. Even so, it makes it possible to build and debug advanced applications and reach the validation phase of your application sooner.

There is also cloud-based/remote monitoring and error recovery. This lets solution developers plan for recovery, support deployments in the field, and get applications back up and running more efficiently after a fault. It also supports geographically distributed development staff, all via PickNik's collaboration with Formant.

Summary of MoveIt Studio

Dave also executed a live demo of the MoveIt Supervisor. The MoveIt Supervisor enables operator-in-the-loop applications, such as identifying objects or tasks in the scene to set up execution in a cluttered or high-noise environment. This is a great example of supervised autonomy, where things are "mostly automated". The demo went off without a hitch: Dave identified a door handle, planned the trajectories for opening it, and executed the plan, and the robot opened the door.

MoveIt Supervisor Demonstration

Dave also reviewed the behavior tree user interface. Built on BehaviorTree.cpp, it supports complex behavior development in an environment that makes tasks simple to visualize and edit.

Coming soon are updates for trajectory introspection, PRM planning graph caching, and optimal trajectory tuning. MoveIt Studio is available from PickNik under a licensing model. Check in with the PickNik team to learn more about MoveIt Studio and how to try it for yourself!

This ended up being a great community meeting, and we look forward to the next community update in December 2022. It has been rewarding to see large tech companies, government agencies, and small innovative startups march together in providing the resources, tools, and capabilities that enable new applications in manufacturing and industry. That is the goal of the ROS-Industrial open source project, and we look forward to what's next!