2025 Americas Annual Meeting - Sharing and Fostering Richer Collaboration

The ROS-Industrial Consortium Americas Annual Meeting 2025 was recently held, gathering Consortium members and stakeholders to review recent advancements, share initiatives, and discuss the strategic direction of the ROS-Industrial open source project. The meeting combined presentations, workshops, and discussions aimed at promoting the growth and development of the ROS-Industrial project’s resources and broadening community and stakeholder engagement. It was the capstone to a full week of networking that included a social meetup and a welcome dinner.

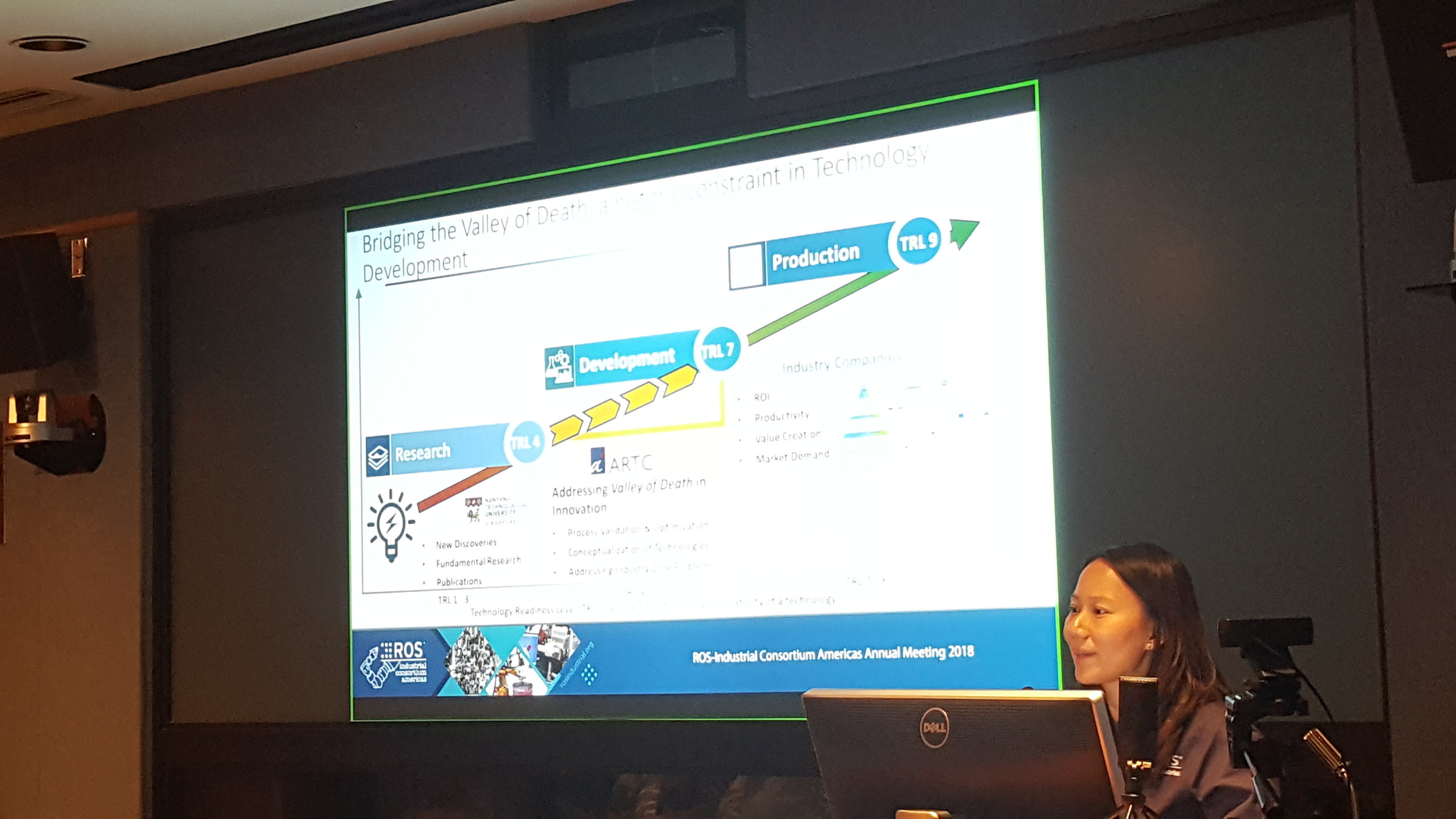

The annual meeting began with a welcome address and a state-of-the-consortium presentation by Matt Robinson, Program Manager, and Michael Ripperger, Technical Lead for the ROS-I Consortium Americas at SwRI. They provided updates on the latest developments, resources provided, and upcoming initiatives aligned with the Consortium's objectives. Next, the ROS-I Consortium European Union and the ROS-I Consortium Asia-Pacific (AP) shared overviews of their recent developments. Yasmine Makkaoui, Scientific Coordinator with Fraunhofer IPA, detailed updates on ROS 2 support and documentation to help robotic manipulator providers create ROS 2 hardware interfaces. Maria Vergo of the Advanced Remanufacturing Technology Centre (ARTC) shared the ROS-I Consortium AP's contributions around Open RMF 2.0 and recent successes in collaboration with its membership.

Next, collaborators on key open source projects, advanced robotics toolsets, and robotics funding programs shared their latest developments. Kat Scott from Intrinsic provided the latest updates on the ROS 2 Kilted Kaiju release, which includes many new features, such as Zenoh as a Tier 1 RMW and an improved rclpy with a new events executor that offers better performance.

Dave Grant, CEO, and Dave Coleman, Chief Product Officer & Founder of PickNik Robotics, provided updates on MoveIt Pro and the new capabilities they are putting into production. Dave Coleman also discussed PickNik's decisions regarding its support for MoveIt 2, which has been limited in recent months as the team has focused on MoveIt Pro. A community-wide effort is underway to build up support for MoveIt 2, and PickNik has recently put out a call for additional MoveIt 2 support. We look forward to collaborating with PickNik and the broader open source community to keep MoveIt 2 strong and healthy!

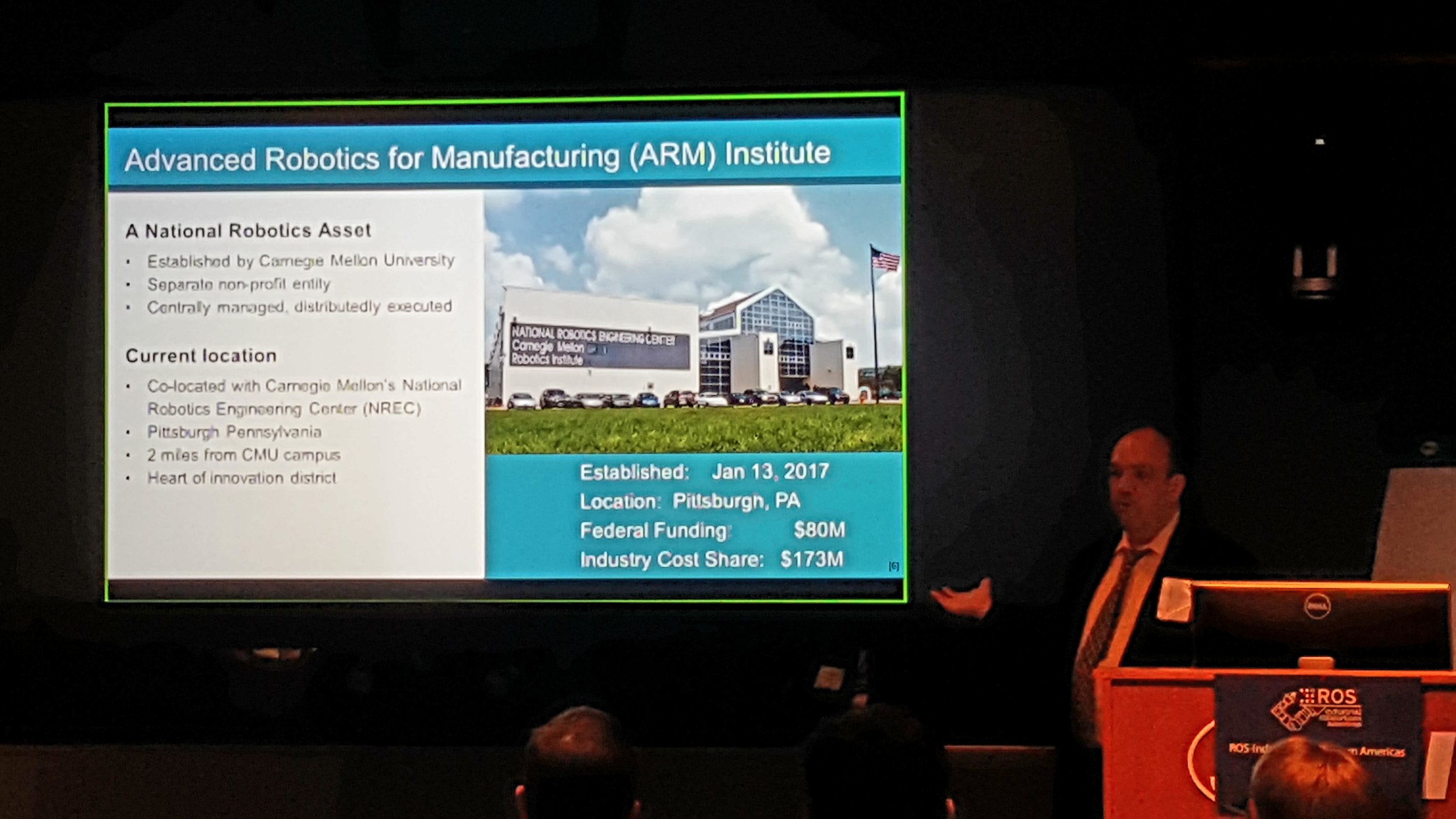

Finally, the morning session wrapped up with Miguel Rodriguez, Programs Manager at the ARM Institute, who presented the latest programs and opportunities for collaboration within the ARM ecosystem. Rodriguez also highlighted additional opportunities, or project calls, around Artificial Intelligence and Casting and Foundry solutions, which seek to provide funding to address core gaps in the industrial robotics space.

The keynote for the day was delivered by Andrew Roberts of Spirit AeroSystems, who shared the Spirit story of bringing ROS, and now ROS 2, to their collaborating universities and how they sustain education that is relevant to their future automation goals leveraging ROS 2. Through internships and collaboration with Wichita State University’s National Institute for Aviation Research (NIAR), they are encouraging a university-provided pipeline of ROS-ready students to continue their advanced robotics journey.

In the afternoon, several additional insightful presentations followed, including:

- Prof. Alberto Munoz from EIC-Tec de Monterrey, who discussed "The Tec de Monterrey Model for Training the Next Generation of Engineers," emphasizing the importance of education in advancing robotics.

- Logan McNeil, Applications Engineer at EWI, who presented "The Challenges of Robot and Process Agnostic Methodologies for Convergent Manufacturing Applications," detailing the challenges of achieving true interoperability in the context of welding.

- Eric Lattas, Staff Engineer at FANUC, who introduced the "FANUC ROS 2 Driver," showcasing the integration and capabilities of ROS 2 within FANUC systems.

- Max Falcone, VP of Sales Engineering at PushCorp, who contributed to the discussion on industry challenges and solutions, describing how PushCorp is providing more tools in its hardware to enable next-generation finishing capabilities.

The meeting also included rotating workshops focusing on critical areas such as the ROS-I Roadmap and Prioritization, and how OEMs can more efficiently leverage developed resources. These workshops aimed to:

- Review and throw rocks at the ROS-I Roadmap to align it better with the Consortium's goals.

- Identify ways to improve the use and leverage of developed resources to enhance efficiency.

The day concluded with a summary of workshop outputs and closing remarks, reiterating the importance of active engagement from members and the community to realize the full potential of ROS-Industrial.

Whiteboard outputs from the workshops held at the RIC Americas Annual Meeting 2025

Immediate actions following the meeting include:

- Continued engagement in ROS-Industrial events and regional meetings throughout the year.

- Sharing the insights and feedback gained from the meeting within members' organizations.

- Participation in collaborative efforts to address identified challenges and advance the strategic initiatives discussed during the event.

Overall, the ROS-Industrial Consortium Americas Annual Meeting 2025 provided a platform for meaningful exchanges and set the stage for future advancements in industrial robotics through a collaborative community effort.

Recordings of the various talks can be found in a playlist on the ROS-I YouTube channel. Thanks to everyone who has supported open source for industry!

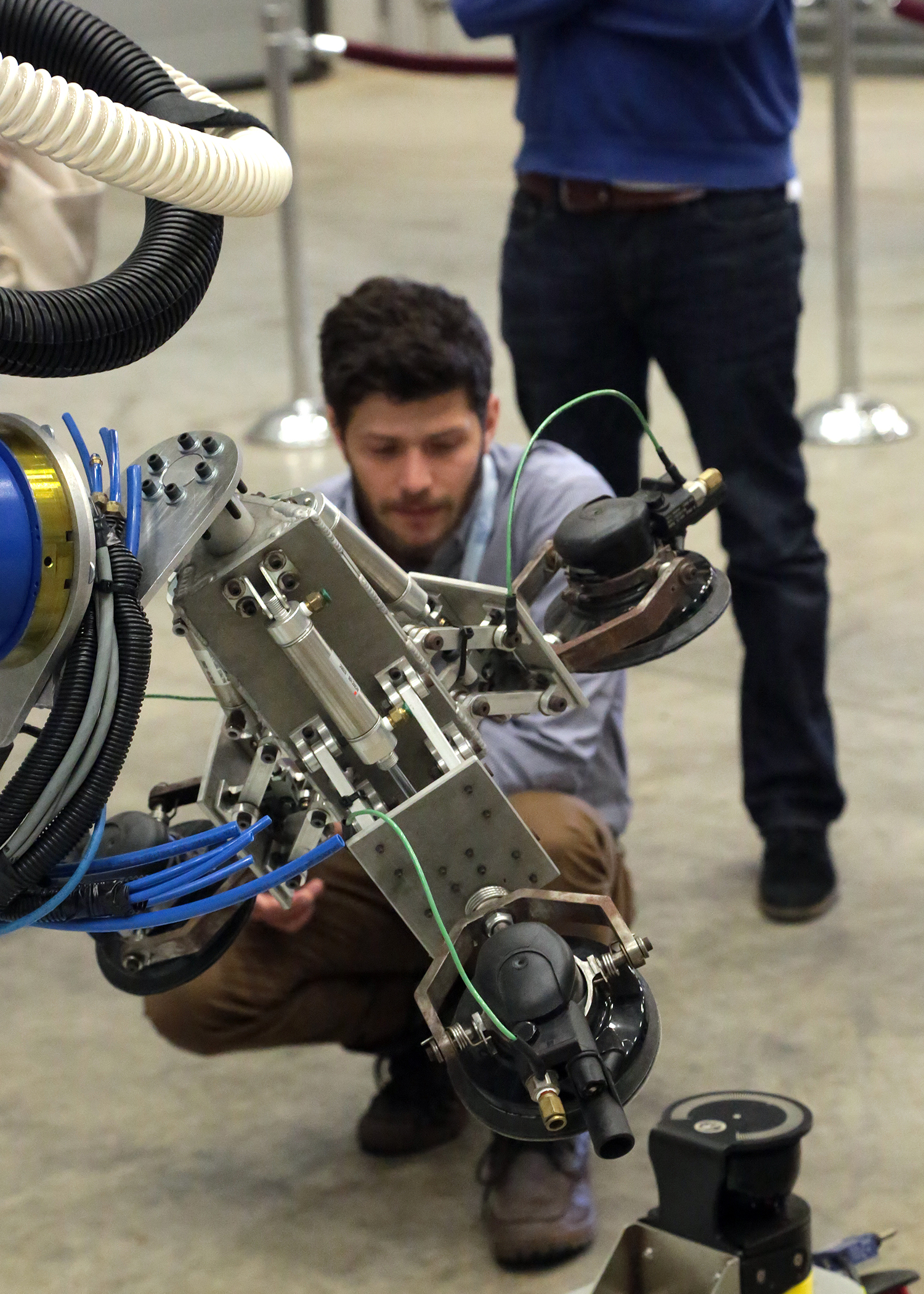

![20190506_142557[1].jpg](https://images.squarespace-cdn.com/content/v1/51df34b1e4b08840dcfd2841/1557762581422-SXQ6AJGMPZ2QCGMTQUGW/20190506_142557%5B1%5D.jpg)

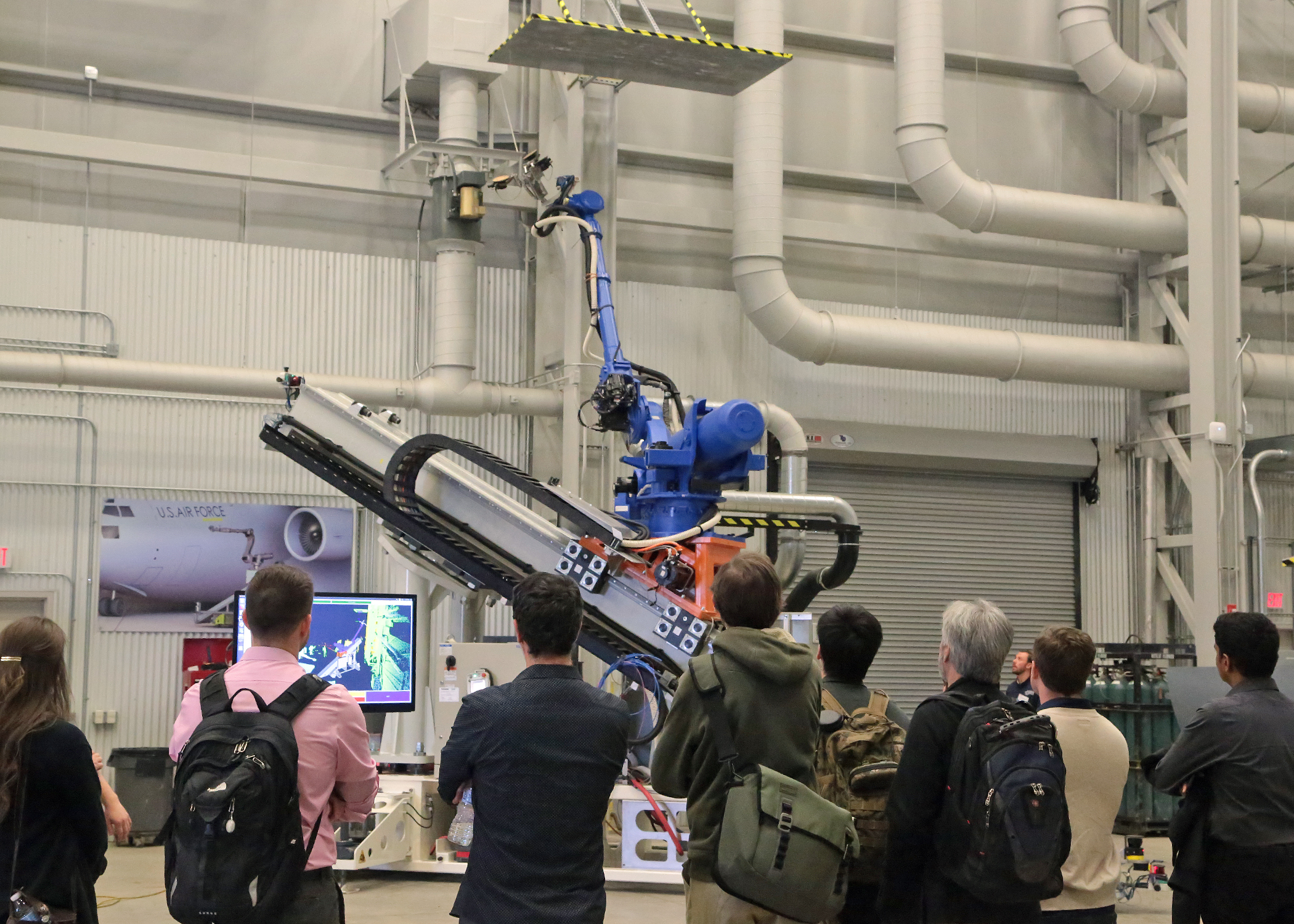

![20190507_090637[1].jpg](https://images.squarespace-cdn.com/content/v1/51df34b1e4b08840dcfd2841/1557762657452-AV4EXOUCNSCB2NU3CVDB/20190507_090637%5B1%5D.jpg)

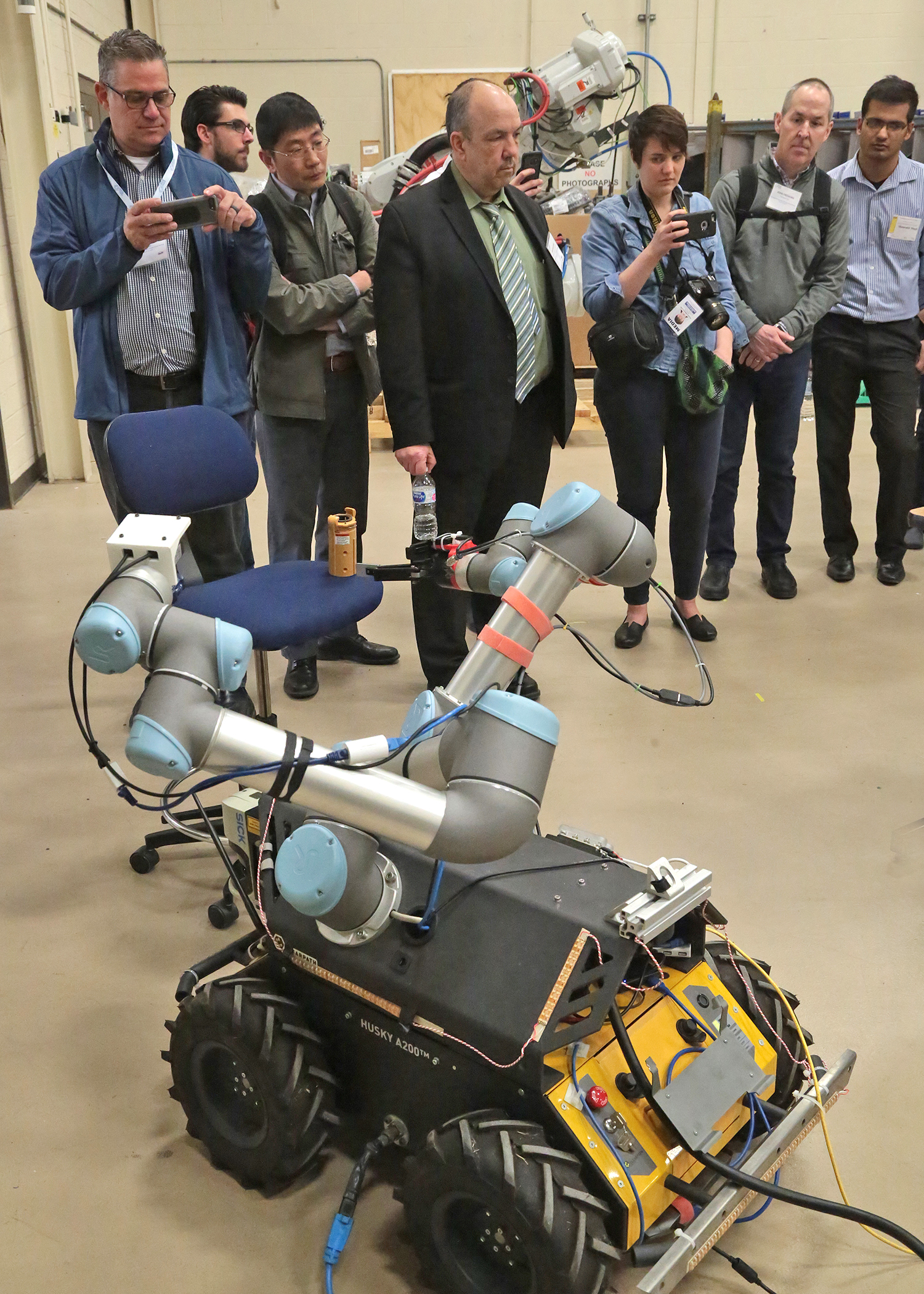

![20190507_113032[1].jpg](https://images.squarespace-cdn.com/content/v1/51df34b1e4b08840dcfd2841/1557762750934-ICC3ROHLLWEUJKIM9VSW/20190507_113032%5B1%5D.jpg)